AI is not intelligent and needs regulation now

This op-ed was published in The Hill Times on September 27, 2023.

Reposted with permission from The Hill Times.

By Rhonda McEwen, president of Victoria University in the University of Toronto

The word “intelligence” has no place in what we now ubiquitously refer to as “artificial intelligence”. The term was coined in the 1950s, at a time when we were only beginning to explore whether a person could distinguish between interacting with another human and interacting with a computer. This is the basic tenet of Alan Turing’s test, which became both an idea and a challenge for scientists. The word “intelligence” was a provocation or a simplification, but it has had a lasting effect on the field. Today, when people are presented with information produced by an AI tool, they often remark that machines are doing what humans can do. That is true, yet for now it is less about intelligence and more about fast pattern recognition: computation that predicts what best follows a pattern, based on millions of examples. It does correctly indicate that humans are mostly predictable. Mostly.

And this is where the benign ends. These tools are reaching a level of sophistication that can make it hard to tell whether what we see and hear online is real. If you have seen the deepfakes of Hillary Clinton endorsing Ron DeSantis, or of Volodymyr Zelensky surrendering, you will appreciate that while low-level pattern recognition is not intelligence, it can be damaging. With the increase in claims of foreign interference and election manipulation, there are dangerous implications for our world as these technologies mature. AI is destabilizing the foundation of trust on which we rely to secure societies built on democratic values and human rights.

Researchers and scientists are saying that the time has come, and is indeed overdue, to regulate AI through legislation to halt the further erosion of foundational principles in our world. Many will bristle and suggest that legislation will limit the creative potential of the technology or limit free speech. However, the Canadian Radio-television and Telecommunications Commission (CRTC) is an example of how impactful regulation can protect against public harm in what can often feel like the Wild West. My warning joins a chorus of voices, many of whom, like me, work in Canadian universities focused on harnessing this technology for benevolent purposes.

Like AI, human intelligence is iterative and is built on data inputs – information. However, the human brain processes those data in a way that also reflects an understanding of context, subtext, and perspective – features that AI lacks. The absence of context, and therefore of moral frameworks, in AI makes it a very efficient tool in the hands of those who want to cause harm, curb human rights and democracy, or commit crimes. In the early 1990s, the drive for internet innovation led to a choice to forgo regulation and tread lightly on policy – the opposite of how we managed radio, television, journalism, and film. Now even the more reticent among us are saying that it is time to revisit this choice.

Canadian political and bureaucratic leaders can rely on Canada’s leading academics in this field to help create a regulatory framework that serves not only as a global beacon but also as a catalyst for meaningful change. It may seem daunting in a world where some leaders regularly use AI to suppress and abuse their own citizens, but this is an area in which Canadians are well equipped to make a difference through well-established and highly respected diplomatic channels.

As leading academics have already noted, failing to act now puts us on a very precarious path across the broad spectrum of human life. Because AI is iterative, without meaningful regulation it will become easier for the average person to cause very serious public harm, should they so wish.

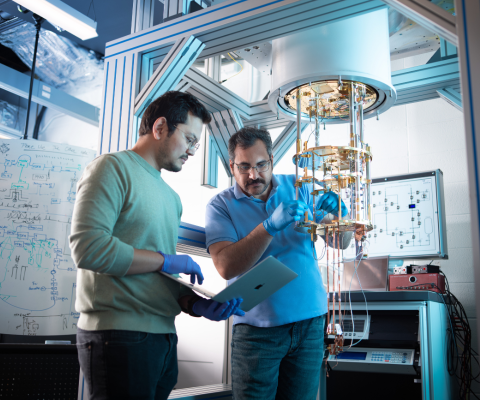

In keeping with Canada’s early global leadership in the development of AI and machine learning, Canadian universities are advancing the application of AI in areas such as healthcare, basic science, computational analytics, manufacturing, and financial services, which will have a transformational impact, driving innovation and economic growth. Even in these promising areas, regulation will ensure that the hoped-for outcomes are fulfilled. History has shown us that some of the most promising discoveries and innovations can cause harm in the absence of regulation.

Dr. Rhonda N. McEwen is the president of Victoria University in the University of Toronto and Canada Research Chair in Tactile Interfaces, Communication and Cognition. Dr. McEwen is an expert on emerging technologies and is co-editor and contributing author of the recently published SAGE Handbook of Human-Machine Communication.

About Universities Canada

Universities Canada is the voice of Canada’s universities at home and abroad, advancing higher education, research and innovation for the benefit of all Canadians.

Media contact:

Lisa Wallace

Assistant Director, Communications

Universities Canada

